|

|

| © Presses polytechniques et universitaires romandes, 2008 All rights reserved |

The present text evolved from course notes developed over a period of a

dozen years teaching undergraduates the basics of signal processing for

communications. The students had mostly a background in electrical

engineering, computer science or mathematics, and were typically

in their third year of studies at Ecole Polytechnique Fédérale de

Lausanne (EPFL), with an interest in communication systems. Thus, they

had been exposed to signals and systems, linear algebra, elements of

analysis (e.g. Fourier series) and some complex analysis, all of this

being fairly standard in an undergraduate program in engineering

sciences.

The notes having reached a certain maturity, including examples, solved

problems and exercises, we decided to turn them into an easy-to-use text on

signal processing, with a look at communications as an application. But

rather than writing one more book on signal processing, of which

many good ones already exist, we deployed the following variations,

which we think will make the book appealing as an undergraduate

text.

While mathematical rigor is not the emphasis, we made sure

to be precise, and thus the text is not approximate in its use

of mathematics. Remember, we think signal processing to be

mathematics applied to a fun topic, and not mathematics for its

own sake, nor a set of applications without foundations.

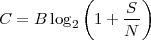

Certainly, the masterpiece in this regard is C. Shannon’s

1948 foundational paper on “The Mathematical Theory

of Communication”. It completely revolutionized the way

communication systems are designed and built, and, still today,

we mostly live in its legacy. Not surprisingly, one of the key

results of signal processing is the sampling theorem for bandlimited

functions (often attributed to Shannon, since it appears in

the above-mentioned paper), the theorem which single-handedly

enabled the digital revolution. To a mathematician, this is a simple

corollary to Fourier series, and he/she might suggest many other

ways to represent such particular functions. However, the strength

of the sampling theorem and its variations (e.g. oversampling

or quantization) is that it is an operational theorem, robust,

and applicable to actual signal acquisition and reconstruction

problems.

In order to showcase such powerful applications, the last chapter

is entirely devoted to developing an end-to-end communication

system, namely a modem for communicating digital information

(or bits) over an analog channel. This real-world application

(which is present in all modern communication devices, from

mobile phones to ADSL boxes) nicely brings together many of the

concepts and designs studied in the previous chapters. Being less formal, more abstract and application-driven seems almost like

moving simultaneously in several and possibly opposite directions, but we

believe we came up with the right balancing act. Ultimately, of course, the

readers and students are the judges!

A last and very important issue is the online access to the text

and supplementary material. A full html version together with the

unavoidable errata and other complementary material is available at

www.sp4comm.org. A solution manual is available to teachers upon

request.

As a closing word, we hope you will enjoy the text, and we welcome your

feedback. Let signal processing begin, and be fun!

Martin Vetterli and Paolo Prandoni

The current book is the result of several iterations of a yearly signal

processing undergraduate class and the authors would like to thank the

students in Communication Systems at EPFL who survived the early

versions of the manuscript and who greatly contributed with their feedback

to improve and refine the text along the years. Invaluable help was also

provided by the numerous teaching assistants who not only volunteered

constructive criticism but came up with a lot of the exercices which appear

at the end of each chapter (and their relative solutions). In no particular

order: Andrea Ridolfi provided insightful mathematical remarks and also

introduced us to the wonders of PsTricks while designing figures. Olivier Roy

and Guillermo Barrenetxea have been indefatigable ambassadors between

teaching and student bodies, helping shape exercices in a (hopefully) more

user-friendly form. Ivana Jovanovic, Florence Bénézit and Patrick

Vandewalle gave us a set of beautiful ideas and pointers thanks to their

recitations on choice signal processing topics. Luciano Sbaiz always lent an

indulgent ear and an insightful answer to all the doubts and worries

which plague scientific writers. We would also like to express our

personal gratitude to our families and friends for their patience and their

constant support; unfortunately, to do so in a proper manner, we

should resort to a lyricism which is sternly frowned upon in technical

textbooks and therefore we must confine ourselves to a simple “thank

you”.

Contents

1 What Is Digital Signal Processing?

1.1 Some History and Philosophy

1.1.1 Digital Signal Processing under the Pyramids

1.1.2 The Hellenic Shift to Analog Processing

1.1.3 “Gentlemen: calculemus!”

1.2 Discrete Time

1.3 Discrete Amplitude

1.4 Communication Systems

1.5 How to Read this Book

1.6 Further Reading

2 Discrete-Time Signals

2.1 Basic Definitions

2.1.1 The Discrete-Time Abstraction

2.1.2 Basic Signals

2.1.3 Digital Frequency

2.1.4 Elementary Operators

2.1.5 The Reproducing Formula

2.1.6 Energy and Power

2.2 Classes of Discrete-Time Signals

2.2.1 Finite-Length Signals

2.2.2 Infinite-Length Signals

2.3 Examples

2.4 Further Reading

2.5 Exercises

3 Signals and Hilbert Spaces

3.1 Euclidean Geometry: a Review

3.2 From Vector Spaces to Hilbert Spaces

3.2.1 The Recipe for Hilbert Space

3.2.2 Examples of Hilbert Spaces

3.2.3 Inner Products and Distances

3.3 Subspaces, Bases, Projections

3.3.1 Definitions

3.3.2 Properties of Orthonormal Bases

3.3.3 Examples of Bases

3.4 Signal Spaces Revisited

3.4.1 Finite-Length Signals

3.4.2 Periodic Signals

3.4.3 Infinite Sequences

3.5 Further Reading

3.6 Exercises

4 Fourier Analysis

4.1 Preliminaries

4.1.1 Complex Exponentials

4.1.2 Complex Oscillations? Negative Frequencies?

4.2 The DFT (Discrete Fourier Transform)

4.2.1 Matrix Form

4.2.2 Explicit Form

4.2.3 Physical Interpretation

4.3 The DFS (Discrete Fourier Series)

4.4 2

4.4.1 The DTFT as the Limit of a DFS

4.4.2 The DTFT as a Formal Change of Basis

4.5 Relationships between Transforms

4.6 Fourier Transform Properties

4.6.1 DTFT Properties

4.6.2 DFS Properties

4.6.3 DFT Properties

4.7 Fourier Analysis in Practice

4.7.1 Plotting Spectral Data

4.7.2 Computing the Transform: the FFT

4.7.3 Cosmetics: Zero-Padding

4.7.4 Spectral Analysis

4.8 Time-Frequency Analysis

4.8.1 The Spectrogram

4.8.2 The Uncertainty Principle

4.9 Digital Frequency vs. Real Frequency

4.10 Examples

4.11 Further Reading

4.12 Exercises

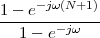

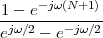

5 Discrete-Time Filters

5.1 Linear Time-Invariant Systems

5.2 Filtering in the Time Domain

5.2.1 The Convolution Operator

5.2.2 Properties of the Impulse Response

5.3 Filtering by Example – Time Domain

5.3.1 FIR Filtering

5.3.2 IIR Filtering

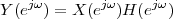

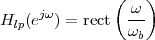

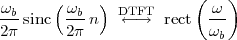

5.4 Filtering in the Frequency Domain

5.4.1 LTI “Eigenfunctions”

5.4.2 The Convolution and Modulation Theorems

5.4.3 Properties of the Frequency Response

5.5 Filtering by Example – Frequency Domain

5.6 Ideal Filters

5.7 Realizable Filters

5.7.1 Constant-Coefficient Difference Equations

5.7.2 The Algorithmic Nature of CCDEs

5.7.3 Filter Analysis and Design

5.8 Examples

5.9 Further Reading

5.10 Exercises

6 The Z-Transform

6.1 Filter Analysis

6.1.1 Solving CCDEs

6.1.2 Causality

6.1.3 Region of Convergence

6.1.4 ROC and System Stability

6.1.5 ROC of Rational Transfer Functions

and Filter Stability

6.2 The Pole-Zero Plot

6.2.1 Pole-Zero Patterns

6.2.2 Pole-Zero Cancellation

6.2.3 Sketching the Transfer Function

from the Pole-Zero Plot

6.3 Filtering by Example – Z-Transform

6.4 Examples

6.5 Further Reading

6.6 Exercises

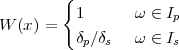

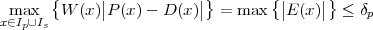

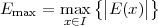

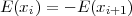

7 Filter Design

7.1 Design Fundamentals

7.1.1 FIR versus IIR

7.1.2 Filter Specifications and Tradeoffs

7.2 FIR Filter Design

7.2.1 FIR Filter Design by Windowing

7.2.2 Minimax FIR Filter Design

7.3 IIR Filter Design

7.3.1 All-Time Classics

7.4 Filter Structures

7.4.1 FIR Filter Structures

7.4.2 IIR Filter Structures

7.4.3 Some Remarks on Numerical Stability

7.5 Filtering and Signal Classes

7.5.1 Filtering of Finite-Length Signals

7.5.2 Filtering of Periodic Sequences

7.6 Examples

7.7 Further Reading

7.8 Exercises

8 Stochastic Signal Processing

8.1 Random Variables

8.2 Random Vectors

8.3 Random Processes

8.4 Spectral Representation

of Stationary Random Processes

8.4.1 Power Spectral Density

8.4.2 PSD of a Stationary Process

8.4.3 White Noise

8.5 Stochastic Signal Processing

8.6 Examples

8.7 Further Reading

8.8 Exercises

9 Interpolation and Sampling

9.1 Preliminaries and Notation

9.2 Continuous-Time Signals

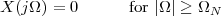

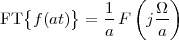

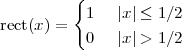

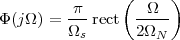

9.3 Bandlimited Signals

9.4 Interpolation

9.4.1 Local Interpolation

9.4.2 Polynomial Interpolation

9.4.3 Sinc Interpolation

9.5 The Sampling Theorem

9.6 Aliasing

9.6.1 Non-Bandlimited Signals

9.6.2 Aliasing: Intuition

9.6.3 Aliasing: Proof

9.6.4 Aliasing: Examples

9.7 Examples

9.8 Appendix

9.9 Further Reading

9.10 Exercises

10 A/D and D/A Conversions

10.1 Quantization

10.1.1 Uniform Scalar Quantization

10.1.2 Advanced Quantizers

10.2 A/D Conversion

10.3 D/A Conversion

10.4 Examples

10.5 Further Reading

10.6 Exercises

11 Multirate Signal Processing

11.1 Downsampling

11.1.1 Properties of the Downsampling Operator

11.1.2 Frequency-Domain Representation

11.1.3 Examples

11.1.4 Downsampling and Filtering

11.2 Upsampling

11.2.1 Upsampling and Interpolation

11.3 Rational Sampling Rate Changes

11.4 Oversampling

11.4.1 Oversampled A/D Conversion

11.4.2 Oversampled D/A Conversion

11.5 Examples

11.6 Further Reading

11.7 Exercises

12 Design of a Digital Communication System

12.1 The Communication Channel

12.1.1 The AM Radio Channel

12.1.2 The Telephone Channel

12.2 Modem Design: The Transmitter

12.2.1 Digital Modulation and the Bandwidth Constraint

12.2.2 Signaling Alphabets and the Power Constraint

12.3 Modem Design: the Receiver

12.3.1 Hilbert Demodulation

12.3.2 The Effects of the Channel

12.4 Adaptive Synchronization

12.4.1 Carrier Recovery

12.4.2 Timing Recovery

12.5 Further Reading

12.6 Exercises

© Presses polytechniques et universitaires romandes, 2008

All rights reservedPreface

Spring 2008, Paris and GrandvauxAcknowledgements

© Presses polytechniques et universitaires romandes, 2008

All rights reserved

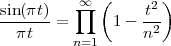

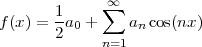

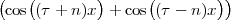

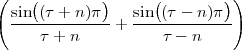

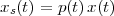

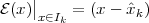

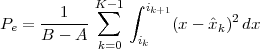

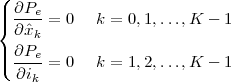

A signal, technically yet generally speaking, is a a formal description of a phenomenon evolving over time or space; by signal processing we denote any manual or “mechanical” operation which modifies, analyzes or otherwise manipulates the information contained in a signal. Consider the simple example of ambient temperature: once we have agreed upon a formal model for this physical variable – Celsius degrees, for instance – we can record the evolution of temperature over time in a variety of ways and the resulting data set represents a temperature “signal”. Simple processing operations can then be carried out even just by hand: for example, we can plot the signal on graph paper as in Figure 1.1, or we can compute derived parameters such as the average temperature in a month.

Conceptually, it is important to note that signal processing operates on an abstract representation of a physical quantity and not on the quantity itself. At the same time, the type of abstract representation we choose for the physical phenomenon of interest determines the nature of a signal processing unit. A temperature regulation device, for instance, is not a signal processing system as a whole. The device does however contain a signal processing core in the feedback control unit which converts the instantaneous measure of the temperature into an ON/OFF trigger for the heating element. The physical nature of this unit depends on the temperature model: a simple design is that of a mechanical device based on the dilation of a metal sensor; more likely, the temperature signal is a voltage generated by a thermocouple and in this case the matched signal processing unit is an operational amplifier.

Finally, the adjective “digital” derives from digitus, the Latin word for finger: it concisely describes a world view where everything can be ultimately represented as an integer number. Counting, first on one’s fingers and then in one’s head, is the earliest and most fundamental form of abstraction; as children we quickly learn that counting does indeed bring disparate objects (the proverbial “apples and oranges”) into a common modeling paradigm, i.e. their cardinality. Digital signal processing is a flavor of signal processing in which everything including time is described in terms of integer numbers; in other words, the abstract representation of choice is a one-size-fit-all countability. Note that our earlier “thought experiment” about ambient temperature fits this paradigm very naturally: the measuring instants form a countable set (the days in a month) and so do the measures themselves (imagine a finite number of ticks on the thermometer’s scale). In digital signal processing the underlying abstract representation is always the set of natural numbers regardless of the signal’s origins; as a consequence, the physical nature of the processing device will also always remain the same, that is, a general digital (micro)processor. The extraordinary power and success of digital signal processing derives from the inherent universality of its associated “world view”.

Probably the earliest recorded example of digital signal processing dates back to the 25th century BC. At the time, Egypt was a powerful kingdom reaching over a thousand kilometers south of the Nile’s delta. For all its latitude, the kingdom’s populated area did not extend for more than a few kilometers on either side of the Nile; indeed, the only inhabitable areas in an otherwise desert expanse were the river banks, which were made fertile by the yearly flood of the river. After a flood, the banks would be left covered with a thin layer of nutrient-rich silt capable of supporting a full agricultural cycle. The floods of the Nile, however, were(1) a rather capricious meteorological phenomenon, with scant or absent floods resulting in little or no yield from the land. The pharaohs quickly understood that, in order to preserve stability, they would have to set up a grain buffer with which to compensate for the unreliability of the Nile’s floods and prevent potential unrest in a famished population during “dry” years. As a consequence, studying and predicting the trend of the floods (and therefore the expected agricultural yield) was of paramount importance in order to determine the operating point of a very dynamic taxation and redistribution mechanism. The floods of the Nile were meticulously recorded by an array of measuring stations called “nilometers” and the resulting data set can indeed be considered a full-fledged digital signal defined on a time base of twelve months. The Palermo Stone, shown in the left panel of Figure 1.2, is a faithful record of the data in the form of a table listing the name of the current pharaoh alongside the yearly flood level; a more modern representation of an flood data set is shown on the left of the figure: bar the references to the pharaohs, the two representations are perfectly equivalent. The Nile’s behavior is still an active area of hydrological research today and it would be surprising if the signal processing operated by the ancient Egyptians on their data had been of much help in anticipating for droughts. Yet, the Palermo Stone is arguably the first recorded digital signal which is still of relevance today.

|

|

“Digital” representations of the world such as those depicted by the Palermo

Stone are adequate for an environment in which quantitative problems are

simple: counting cattle, counting bushels of wheat, counting days and so on.

As soon as the interaction with the world becomes more complex, so

necessarily do the models used to interpret the world itself. Geometry, for

instance, is born of the necessity of measuring and subdividing land

property. In the act of splitting a certain quantity into parts we can

already see the initial difficulties with an integer-based world view

;(2)

yet, until the Hellenic period, western civilization considered natural

numbers and their ratios all that was needed to describe nature in an

operational fashion. In the 6th century BC, however, a devastated

Pythagoras realized that the the side and the diagonal of a square are

incommensurable, i.e. that  is not a simple fraction. The discovery of

what we now call irrational numbers “sealed the deal” on an abstract model

of the world that had already appeared in early geometric treatises and

which today is called the continuum. Heavily steeped in its geometric roots

(i.e. in the infinity of points in a segment), the continuum model postulates

that time and space are an uninterrupted flow which can be divided

arbitrarily many times into arbitrarily (and infinitely) small pieces. In signal

processing parlance, this is known as the “analog” world model and,

in this model, integer numbers are considered primitive entities, as

rough and awkward as a set of sledgehammers in a watchmaker’s

shop.

is not a simple fraction. The discovery of

what we now call irrational numbers “sealed the deal” on an abstract model

of the world that had already appeared in early geometric treatises and

which today is called the continuum. Heavily steeped in its geometric roots

(i.e. in the infinity of points in a segment), the continuum model postulates

that time and space are an uninterrupted flow which can be divided

arbitrarily many times into arbitrarily (and infinitely) small pieces. In signal

processing parlance, this is known as the “analog” world model and,

in this model, integer numbers are considered primitive entities, as

rough and awkward as a set of sledgehammers in a watchmaker’s

shop.

In the continuum, the infinitely big and the infinitely small dance together in complex patterns which often defy our intuition and which required almost two thousand years to be properly mastered. This is of course not the place to delve deeper into this extremely fascinating epistemological domain; suffice it to say that the apparent incompatibility between the digital and the analog world views appeared right from the start (i.e. from the 5th century BC) in Zeno’s works; we will appreciate later the immense import that this has on signal processing in the context of the sampling theorem.

Zeno’s paradoxes are well known and they underscore this unbridgeable gap between our intuitive, integer-based grasp of the world and a model of the world based on the continuum. Consider for instance the dichotomy paradox; Zeno states that if you try to move along a line from point A to point B you will never in fact be able to reach your destination. The reasoning goes as follows: in order to reach B, you will have to first go through point C, which is located mid-way between A and B; but, even before you reach C, you will have to reach D, which is the midpoint between A and C; and so on ad infinitum. Since there is an infinity of such intermediate points, Zeno argues, moving from A to B requires you to complete an infinite number of tasks, which is humanly impossible. Zeno of course was well aware of the empirical evidence to the contrary but he was brilliantly pointing out the extreme trickery of a model of the world which had not yet formally defined the concept of infinity. The complexity of the intellectual machinery needed to solidly counter Zeno’s argument is such that even today the paradox is food for thought. A first-year calculus student may be tempted to offhandedly dismiss the problem by stating

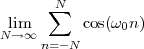

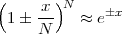

| (1.1) |

but this is just a void formalism begging the initial question if the underlying notion of the continuum is not explicitly worked out.(3) In reality Zeno’s paradoxes cannot be “solved”, since they cease to be paradoxes once the continuum model is fully understood.

The two competing models for the world, digital and analog, coexisted quite peacefully for quite a few centuries, one as the tool of the trade for farmers, merchants, bankers, the other as an intellectual pursuit for mathematicians and astronomers. Slowly but surely, however, the increasing complexity of an expanding world spurred the more practically-oriented minds to pursue science as a means to solve very tangible problems besides describing the motion of the planets. Calculus, brought to its full glory by Newton and Leibnitz in the 17th century, proved to be an incredibly powerful tool when applied to eminently practical concerns such as ballistics, ship routing, mechanical design and so on; such was the faith in the power of the new science that Leibnitz envisioned a not-too-distant future in which all human disputes, including problems of morals and politics, could be worked out with pen and paper: “gentlemen, calculemus”. If only.

As Cauchy unsurpassably explained later, everything in calculus is a limit and therefore everything in calculus is a celebration of the power of the continuum. Still, in order to apply the calculus machinery to the real world, the real world has to be modeled as something calculus understands, namely a function of a real (i.e. continuous) variable. As mentioned before, there are vast domains of research well behaved enough to admit such an analytical representation; astronomy is the first one to come to mind, but so is ballistics, for instance. If we go back to our temperature measurement example, however, we run into the first difficulty of the analytical paradigm: we now need to model our measured temperature as a function of continuous time, which means that the value of the temperature should be available at any given instant and not just once per day. A “temperature function” as in Figure 1.3 is quite puzzling to define if all we have (and if all we can have, in fact) is just a set of empirical measurements reasonably spaced in time. Even in the rare cases in which an analytical model of the phenomenon is available, a second difficulty arises when the practical application of calculus involves the use of functions which are only available in tabulated form. The trigonometric and logarithmic tables are a typical example of how a continuous model needs to be made countable again in order to be put to real use. Algorithmic procedures such as series expansions and numerical integration methods are other ways to bring the analytic results within the realm of the practically computable. These parallel tracks of scientific development, the “Platonic” ideal of analytical results and the slide rule reality of practitioners, have coexisted for centuries and they have found their most durable mutual peace in digital signal processing, as will appear shortly.

One of the fundamental problems in signal processing is to obtain a permanent record of the signal itself. Think back of the ambient temperature example, or of the floods of the Nile: in both cases a description of the phenomenon was gathered by a naive sampling operation, i.e. by measuring the quantity of interest at regular intervals. This is a very intuitive process and it reflects the very natural act of “looking up the current value and writing it down”. Manually this operation is clearly quite slow but it is conceivable to speed it up mechanically so as to obtain a much larger number of measurements per unit of time. Our measuring machine, however fast, still will never be able to take an infinite amount of samples in a finite time interval: we are back in the clutches of Zeno’s paradoxes and one would be tempted to conclude that a true analytical representation of the signal is impossible to obtain.

At the same time, the history of applied science provides us with many examples of recording machines capable of providing an “analog” image of a physical phenomenon. Consider for instance a thermograph: this is a mechanical device in which temperature deflects an ink-tipped metal stylus in contact with a slowly rolling paper-covered cylinder. Thermographs like the one sketched in Figure 1.4 are still currently in use in some simple weather stations and they provide a chart in which a temperature function as in Figure 1.3 is duly plotted. Incidentally, the principle is the same in early sound recording devices: Edison’s phonograph used the deflection of a steel pin connected to a membrane to impress a “continuous-time” sound wave as a groove on a wax cylinder. The problem with these analog recordings is that they are not abstract signals but a conversion of a physical phenomenon into another physical phenomenon: the temperature, for instance, is converted into the amount of ink on paper while the sound pressure wave is converted into the physical depth of the groove. The advent of electronics did not change the concept: an audio tape, for instance, is obtained by converting a pressure wave into an electrical current and then into a magnetic deflection. The fundamental consequence is that, for analog signals, a different signal processing system needs to be designed explicitly for each specific form of recording.

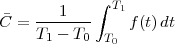

Consider for instance the problem of computing the average temperature

over a certain time interval. Calculus provides us with the exact answer  if

we know the elusive “temperature function” f(t) over an interval [T0,T1]

(see Figure 1.5, top panel):

if

we know the elusive “temperature function” f(t) over an interval [T0,T1]

(see Figure 1.5, top panel):

| (1.2) |

We can try to reproduce the integration with a “machine” adapted to the particular representation of temperature we have at hand: in the case of the thermograph, for instance, we can use a planimeter as in Figure 1.6, a manual device which computes the area of a drawn surface; in a more modern incarnation in which the temperature signal is given by a thermocouple, we can integrate the voltage with the RC network in Figure 1.7. In both cases, in spite of the simplicity of the problem, we can instantly see the practical complications and the degree of specialization needed to achieve something as simple as an average for an analog signal.

Now consider the case in which all we have is a set of daily measurements c1,c2,…,cD as in Figure 1.1; the “average” temperature of our measurements over D days is simply:

| (1.3) |

(as shown in the bottom panel of Figure 1.5) and this is an elementary sum

of D terms which anyone can carry out by hand and which does not depend

on how the measurements have been obtained: wickedly simple! So,

obviously, the question is: “How different (if at all) is Ĉ from  ?” In order to

find out we can remark that if we accept the existence of a temperature

function f(t) then the measured values cn are samples of the function taken

one day apart:

?” In order to

find out we can remark that if we accept the existence of a temperature

function f(t) then the measured values cn are samples of the function taken

one day apart:

|

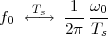

(where Ts is the duration of a day). In this light, the sum (1.3) is just the Riemann approximation to the integral in (1.2) and the question becomes an assessment on how good an approximation that is. Another way to look at the problem is to ask ourselves how much information we are discarding by only keeping samples of a continuous-time function.

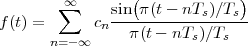

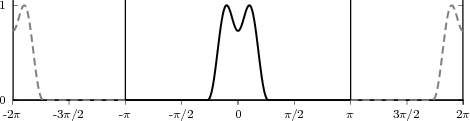

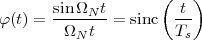

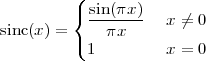

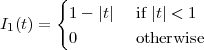

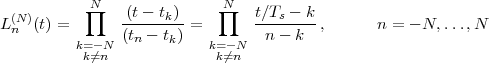

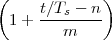

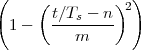

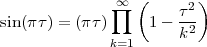

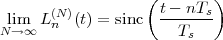

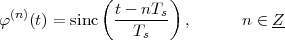

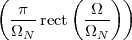

The answer, which we will study in detail in Chapter 9, is that in fact the continuous-time function and the set of samples are perfectly equivalent representations – provided that the underlying physical phenomenon “doesn’t change too fast”. Let us put the proviso aside for the time being and concentrate instead on the good news: first, the analog and the digital world can perfectly coexist; second, we actually possess a constructive way to move between worlds: the sampling theorem, discovered and rediscovered by many at the beginning of the 20th century(4) , tells us that the continuous-time function can be obtained from the samples as

| (1.4) |

So, in theory, once we have a set of measured values, we can build the continuous-time representation and use the tools of calculus. In reality things are even simpler: if we plug (1.4) into our analytic formula for the average (1.2) we can show that the result is a simple sum like (1.3). So we don’t need to explicitly go “through the looking glass” back to continuous-time: the tools of calculus have a discrete-time equivalent which we can use directly.

The equivalence between the discrete and continuous representations only holds for signals which are sufficiently “slow” with respect to how fast we sample them. This makes a lot of sense: we need to make sure that the signal does not do “crazy” things between successive samples; only if it is smooth and well behaved can we afford to have such sampling gaps. Quantitatively, the sampling theorem links the speed at which we need to repeatedly measure the signal to the maximum frequency contained in its spectrum. Spectra are calculated using the Fourier transform which, interestingly enough, was originally devised as a tool to break periodic functions into a countable set of building blocks. Everything comes together.

While it appears that the time continuum has been tamed by the sampling theorem, we are nevertheless left with another pesky problem: the precision of our measurements. If we model a phenomenon as an analytical function, not only is the argument (the time domain) a continuous variable but so is the function’s value (the codomain); a practical measurement, however, will never achieve an infinite precision and we have another paradox on our hands. Consider our temperature example once more: we can use a mercury thermometer and decide to write down just the number of degrees; maybe we can be more precise and note the half-degrees as well; with a magnifying glass we could try to record the tenths of a degree – but we would most likely have to stop there. With a more sophisticated thermocouple we could reach a precision of one hundredth of a degree and possibly more but, still, we would have to settle on a maximum number of decimal places. Now, if we know that our measures have a fixed number of digits, the set of all possible measures is actually countable and we have effectively mapped the codomain of our temperature function onto the set of integer numbers. This process is called quantization and it is the method, together with sampling, to obtain a fully digital signal.

In a way, quantization deals with the problem of the continuum in a much “rougher” way than in the case of time: we simply accept a loss of precision with respect to the ideal model. There is a very good reason for that and it goes under the name of noise. The mechanical recording devices we just saw, such as the thermograph or the phonograph, give the illusion of analytical precision but are in practice subject to severe mechanical limitations. Any analog recording device suffers from the same fate and even if electronic circuits can achieve an excellent performance, in the limit the unavoidable thermal agitation in the components constitutes a noise floor which limits the “equivalent number of digits”. Noise is a fact of nature that cannot be eliminated, hence our acceptance of a finite (i.e. countable) precision.

Noise is not just a problem in measurement but also in processing. Figure 1.8 shows the two archetypal types of analog and digital computing devices; while technological progress may have significantly improved the speed of each, the underlying principles remain the same for both. An analog signal processing system, much like the slide rule, uses the displacement of physical quantities (gears or electric charge) to perform its task; each element in the system, however, acts as a source of noise so that complex or, more importantly, cheap designs introduce imprecisions in the final result (good slide rules used to be very expensive). On the other hand the abacus, working only with integer arithmetic, is a perfectly precise machine(5) even if it’s made with rocks and sticks. Digital signal processing works with countable sequences of integers so that in a digital architecture no processing noise is introduced. A classic example is the problem of reproducing a signal. Before mp3 existed and file sharing became the bootlegging method of choice, people would “make tapes”. When someone bought a vinyl record he would allow his friends to record it on a cassette; however, a “peer-to-peer” dissemination of illegally taped music never really took off because of the “second generation noise”, i.e. because of the ever increasing hiss that would appear in a tape made from another tape. Basically only first generation copies of the purchased vinyl were acceptable quality on home equipment. With digital formats, on the other hand, duplication is really equivalent to copying down a (very long) list of integers and even very cheap equipment can do that without error.

Finally, a short remark on terminology. The amplitude accuracy of a set of samples is entirely dependent on the processing hardware; in current parlance this is indicated by the number of bits per sample of a given representation: compact disks, for instance, use 16 bits per sample while DVDs use 24. Because of its “contingent” nature, quantization is almost always ignored in the core theory of signal processing and all derivations are carried out as if the samples were real numbers; therefore, in order to be precise, we will almost always use the term discrete-time signal processing and leave the label “digital signal processing” (DSP) to the world of actual devices. Neglecting quantization will allow us to obtain very general results but care must be exercised: in the practice, actual implementations will have to deal with the effects of finite precision, sometimes with very disruptive consequences.

Signals in digital form provide us with a very convenient abstract representation which is both simple and powerful; yet this does not shield us from the need to deal with an “outside” world which is probably best modeled by the analog paradigm. Consider a mundane act such as placing a call on a cell phone, as in Figure 1.9: humans are analog devices after all and they produce analog sound waves; the phone converts these into digital format, does some digital processing and then outputs an analog electromagnetic wave on its antenna. The radio wave travels to the base station in which it is demodulated, converted to digital format to recover the voice signal. The call, as a digital signal, continues through a switch and then is injected into an optical fiber as an analog light wave. The wave travels along the network and then the process is inverted until an analog sound wave is generated by the loudspeaker at the receiver’s end.

Communication systems are in general a prime example of sophisticated interplay between the digital and the analog world: while all the processing is undoubtedly best done digitally, signal propagation in a medium (be it the the air, the electromagnetic spectrum or an optical fiber) is the domain of differential (rather than difference) equations. And yet, even when digital processing must necessarily hand over control to an analog interface, it does so in a way that leaves no doubt as to who’s boss, so to speak: for, instead of transmitting an analog signal which is the reconstructed “real” function as per (1.4), we always transmit an analog signal which encodes the digital representation of the data. This concept is really at the heart of the “digital revolution” and, just like in the cassette tape example, it has to do with noise.

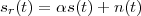

Imagine an analog voice signal s(t) which is transmitted over a (long) telephone line; a simplified description of the received signal is

|

where the parameter α, with α < 1, is the attenuation that the signal incurs and where n(t) is the noise introduced by the system. The noise function is of obviously unknown (it is often modeled as a Gaussian process, as we will see) and so, once it’s added to the signal, it’s impossible to eliminate it. Because of attenuation, the receiver will include an amplifier with gain G to restore the voice signal to its original level; with G = 1∕α we will have

|

Unfortunately, as it appears, in order to regenerate the analog signal we also have amplified the noise G times; clearly, if G is large (i.e. if there is a lot of attenuation to compensate for) the voice signal end up buried in noise. The problem is exacerbated if many intermediate amplifiers have to be used in cascade, as is the case in long submarine cables.

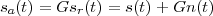

Consider now a digital voice signal, that is, a discrete-time signal whose samples have been quantized over, say, 256 levels: each sample can therefore be represented by an 8-bit word and the whole speech signal can be represented as a very long sequence of binary digits. We now build an analog signal as a two-level signal which switches for a few instants between, say, -1 V and +1 V for every “0” and “1” bit in the sequence respectively. The received signal will still be

|

but, to regenerate it, instead of linear amplification we can use nonlinear thresholding:

|

Figure 1.10 clearly shows that as long as the magnitude of the noise is less than α the two-level signal can be regenerated perfectly; furthermore, the regeneration process can be repeated as many times as necessary with no overall degradation.

|

|

In reality of course things are a little more complicated and, because of the nature of noise, it is impossible to guarantee that some of the bits won’t be corrupted. The answer is to use error correcting codes which, by introducing redundancy in the signal, make the sequence of ones and zeros robust to the presence of errors; a scratched CD can still play flawlessly because of the Reed-Solomon error correcting codes used for the data. Data coding is the core subject of Information Theory and it is behind the stellar performance of modern communication systems; interestingly enough, the most successful codes have emerged from group theory, a branch of mathematics dealing with the properties of closed sets of integer numbers. Integers again! Digital signal processing and information theory have been able to join forces so successfully because they share a common data model (the integer) and therefore they share the same architecture (the processor). Computer code written to implement a digital filter can dovetail seamlessly with code written to implement error correction; linear processing and nonlinear flow control coexist naturally.

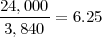

A simple example of the power unleashed by digital signal processing is the performance of transatlantic cables. The first operational telegraph cable from Europe to North America was laid in 1858 (see Fig. 1.11); it worked for about a month before being irrecoverably damaged.(6) From then on, new materials and rapid progress in electrotechnics boosted the performance of each subsequent cable; the key events in the timeline of transatlantic communications are shown in Table 1.1. The first transatlantic telephone cable was laid in 1956 and more followed in the next two decades with increasing capacity due to multicore cables and better repeaters; the invention of the echo canceler further improved the number of voice channels for already deployed cables. In 1968 the first experiments in PCM digital telephony were successfully completed and the quantum leap was around the corner: by the end of the 70’s cables were carrying over one order of magnitude more voice channels than in the 60’s. Finally, the deployment of the first fiber optic cable in 1988 opened the door to staggering capacities (and enabled the dramatic growth of the Internet).

Finally, it’s impossible not to mention the advent of data compression in this brief review of communication landmarks. Again, digital processing allows the coexistence of standard processing with sophisticated decision logic; this enables the implementation of complex data-dependent compression techniques and the inclusion of psychoperceptual models in order to match the compression strategy to the characteristics of the human visual or auditory system. A music format such as mp3 is perhaps the first example to come to mind but, as shown in Table 1.2, all communication domains have been greatly enhanced by the gains in throughput enabled by data compression.

|

|

This book tries to build a largely self-contained development of digital signal processing theory from within discrete time, while the relationship to the analog model of the world is tackled only after all the fundamental “pieces of the puzzle” are already in place. Historically, modern discrete-time processing started to consolidate in the 50’s when mainframe computers became powerful enough to handle the effective simulations of analog electronic networks. By the end of the 70’s the discipline had by all standards reached maturity; so much so, in fact, that the major textbooks on the subject still in use today had basically already appeared by 1975. Because of its ancillary origin with respect to the problems of that day, however, discrete-time signal processing has long been presented as a tributary to much more established disciplines such as Signals and Systems. While historically justifiable, that approach is no longer tenable today for three fundamental reasons: first, the pervasiveness of digital storage for data (from CDs to DVDs to flash drives) implies that most devices today are designed for discrete-time signals to start with; second, the trend in signal processing devices is to move the analog-to-digital and digital-to-analog converters at the very beginning and the very end of the processing chain so that even “classically analog” operations such as modulation and demodulation are now done in discrete-time; third, the availability of numerical packages like Matlab provides a testbed for signal processing experiments (both academically and just for fun) which is far more accessible and widespread than an electronics lab (not to mention affordable).

The idea therefore is to introduce discrete-time signals as a self-standing entity (Chap. 2), much in the natural way of a temperature sequence or a series of flood measurements, and then to remark that the mathematical structures used to describe discrete-time signals are one and the same with the structures used to describe vector spaces (Chap. 3). Equipped with the geometrical intuition afforded to us by the concept of vector space, we can proceed to “dissect” discrete-time signals with the Fourier transform, which turns out to be just a change of basis (Chap. 4). The Fourier transform opens the passage between the time domain and the frequency domain and, thanks to this dual understanding, we are ready to tackle the concept of processing as performed by discrete-time linear systems, also known as filters (Chap. 5). Next comes the very practical task of designing a filter to order, with an eye to the subtleties involved in filter implementation; we will mostly consider FIR filters, which are unique to discrete time (Chaps 6 and 7). After a brief excursion in the realm of stochastic sequences (Chap. 8) we will finally build a bridge between our discrete-time world and the continuous-time models of physics and electronics with the concepts of sampling and interpolation (Chap. 9); and digital signals will be completely accounted for after a study of quantization (Chap. 10). We will finally go back to purely discrete time for the final topic, multirate signal processing (Chap. 11), before putting it all together in the final chapter: the analysis of a commercial voiceband modem (Chap. 12).

The Bible of digital signal processing was and remains Discrete-Time Signal Processing, by A. V. Oppenheim and R. W. Schafer (Prentice-Hall, last edition in 1999); exceedingly exhaustive, it is a must-have reference. For background in signals and systems, the eponimous Signals and Systems, by Oppenheim, Willsky and Nawab (Prentice Hall, 1997) is a good start.

Most of the historical references mentioned in this introduction can be integrated by simple web searches. Other comprehensive books on digital signal processing include S. K. Mitra’s Digital Signal Processing (McGraw Hill, 2006) and Digital Signal Processing, by J. G. Proakis and D. K. Manolakis (Prentis Hall 2006). For a fascinating excursus on the origin of calculus, see D. Hairer and G. Wanner, Analysis by its History (Springer-Verlag, 1996). A more than compelling epistemological essay on the continuum is Everything and More, by David Foster Wallace (Norton, 2003), which manages to be both profound and hilarious in an unprecedented way.

Finally, the very prolific literature on current signal processing research is published mainly by the Institute of Electronics and Electrical Engineers (IEEE) in several of its transactions such as IEEE Transactions on Signal Processing, IEEE Transactions on Image Processing and IEEE Transactions on Speech and Audio Processing.

| © Presses polytechniques et universitaires romandes, 2008 All rights reserved |

In this Chapter we define more formally the concept of the discrete-time signal and establish an associated basic taxonomy used in the remainder of the book. Historically, discrete-time signals have often been introduced as the discretized version of continuous-time signals, i.e. as the sampled values of analog quantities, such as the voltage at the output of an analog circuit; accordingly, many of the derivations proceeded within the framework of an underlying continuous-time reality. In truth, the discretization of analog signals is only part of the story, and a rather minor one nowadays. Digital signal processing, especially in the context of communication systems, is much more concerned with the synthesis of discrete-time signals rather than with sampling. That is why we choose to introduce discrete-time signals from an abstract and self-contained point of view.

A discrete-time signal is a complex-valued sequence. Remember that a

sequence is defined as a complex-valued function of an integer index n, with

n  Z; as such, it is a two-sided, infinite collection of values. A sequence can

be defined analytically in closed form, as for example:

Z; as such, it is a two-sided, infinite collection of values. A sequence can

be defined analytically in closed form, as for example:

![x[n] = (n mod 11) - 5](livre24x.png) | (2.1) |

shown as the “triangular” waveform plotted in Figure 2.1; or

![j πn

x [n] = e 20](livre25x.png) | (2.2) |

which is a complex exponential of period 40 samples, plotted in Figure 2.2. An example of a sequence drawn from the real world is

![x[n ] = The average Dow -Jones index in year n](livre26x.png) | (2.3) |

plotted in Figure 2.3 from year 1900 to 2002. Another example, this time of a random sequence, is

![x[n] = the n-th output of a random source U(- 1,1)](livre27x.png) | (2.4) |

a realization of which is plotted in Figure 2.4.

A few notes are in order:

While analytical forms of discrete-time signals such as the ones above are useful to illustrate the key points of signal processing and are absolutely necessary in the mathematical abstractions which follow, they are non-etheless just that, abstract examples. How does the notion of a discrete-time signal relate to the world around us? A discrete-time signal, in fact, captures our necessarily limited ability to take repeated accurate measurements of a physical quantity. We might be keeping track of the stock market index at the end of each day to draw a pencil and paper chart; or we might be measuring the voltage level at the output of a microphone 44,100 times per second (obviously not by hand!) to record some music via the computer’s soundcard. In both cases we need “time to write down the value” and are therefore forced to neglect everything that happens between measuring times. This “look and write down” operation is what is normally referred to as sampling. There are real-world phenomena which lend themselves very naturally and very intuitively to a discrete-time representation: the daily Dow-Jones index, for example, solar spots, yearly floods of the Nile, etc. There seems to be no irrecoverable loss in this neglect of intermediate values. But what about music, or radio waves? At this point it is not altogether clear from an intuitive point of view how a sampled measurement of these phenomena entail no loss of information. The mathematical proof of this will be shown in detail when we study the sampling theorem; for the time being let us say that “the proof of the cake is in the eating”: just listen to your favorite CD!

The important point to make here is that, once a real-world signal is converted to a discrete-time representation, the underlying notion of “time between measurements” becomes completely abstract. All we are left with is a sequence of numbers, and all signal processing manipulations, with their intended results, are independent of the way the discrete-time signal is obtained. The power and the beauty of digital signal processing lies in part with its invariance with respect to the underlying physical reality. This is in stark contrast with the world of analog circuits and systems, which have to be realized in a version specific to the physical nature of the input signals.

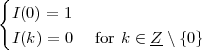

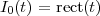

The following sequences are fundamental building blocks for the theory of signal processing.

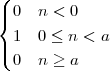

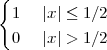

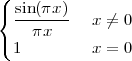

Impulse. The discrete-time impulse (or discrete-time delta function) is potentially the simplest discrete-time signal; it is shown in Figure 2.5(a) and is defined as

![{

δ[n ] = 1 n = 0

0 n ⁄= 0](livre34x.png) | (2.5) |

Unit Step. The discrete-time unit step is shown in Figure 2.5(b) and is defined by the following expression:

![{

1 n ≥ 0

u[n] = 0 n < 0](livre35x.png) | (2.6) |

The unit step can be obtained via a discrete-time integration of the impulse (see eq. (2.16)).

Exponential Decay. The discrete-time exponential decay is shown in Figure 2.5(c) and is defined as

![n

x[n] = a u [n], a ∈ C, |a| < 1](livre36x.png) | (2.7) |

The exponential decay is, as we will see, the free response of a discrete-time first order recursive filter. Exponential sequences are well-behaved only for values of a less than one in magnitude; sequences in which |a| > 1 are unbounded and represent an unstable behavior (their energy and power are both infinite).

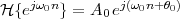

Complex Exponential. The discrete-time complex exponential has already been shown in Figure 2.2 and is defined as

![j(ω n+φ)

x[n ] = e 0](livre37x.png) | (2.8) |

Special cases of the complex exponential are the real-valued discrete-time sinusoidal oscillations:

| x[n] | = sin(ω0n + φ) | (2.9) |

| x[n] | = cos(ω0n + φ) | (2.10) |

|

a)

|

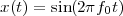

With respect to the oscillatory behavior captured by the complex exponential, a note on the concept of “frequency” is in order. In the continuous-time world (the world of textbook physics, to be clear), where time is measured in seconds, the usual unit of measure for frequency is the Hertz which is equivalent to 1∕second. In the discrete-time world, where the index n represents a dimensionless time, “digital” frequency is expressed in radians which is itself a dimensionless quantity.(1) The best way to appreciate this is to consider an algorithm to generate successive samples of a discrete-time sinusoid at a digital frequency ω0:

|

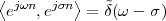

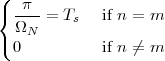

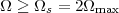

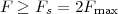

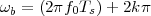

At each iteration,(2) the argument of the trigonometric function is incremented by ω0 and a new output sample is produced. With this in mind, it is easy to see that the highest frequency manageable by a discrete-time system is ωmax = 2π; for any frequency larger than this, the inner 2π-periodicity of the trigonometric functions “maps back” the output values to a frequency between 0 and 2π. This can be expressed as an equation:

| (2.11) |

for all values of k  Z. This 2π-equivalence of digital frequencies

is a pervasive concept in digital signal processing and it has many

important consequences which we will study in detail in the next

Chapters.

Z. This 2π-equivalence of digital frequencies

is a pervasive concept in digital signal processing and it has many

important consequences which we will study in detail in the next

Chapters.

In this Section we present some elementary operations on sequences.

Shift. A sequence x[n], shifted by an integer k is simply:

![y[n] = x [n - k]](livre43x.png) | (2.12) |

If k is positive, the signal is shifted “to the left”, meaning that the signal has been delayed; if k is negative, the signal is shifted “to the right”, meaning that the signal has been advanced. The delay operator can be indicated by the following notation:

![{ }

Dk x [n] = x[n- k]](livre44x.png)

Scaling. A sequence x[n] scaled by a factor α  C is

C is

![y[n] = αx[n]](livre45x.png) | (2.13) |

If α is real, then the scaling represents a simple amplification or attenuation of the signal (when α > 1 and α < 1, respectively). If α is complex, amplification and attenuation are compounded with a phase shift.

Sum. The sum of two sequences x[n] and w[n] is their term-by-term sum:

![y[n ] = x[n]+ w [n ]](livre46x.png) | (2.14) |

Please note that sum and scaling are linear operators. Informally, this means scaling and sum behave “intuitively”:

![( )

α x[n ]+ w[n] = αx[n]+ αw [n]](livre47x.png)

or

![Dk {x[n]+ w [n ]} = x[n - k]+ w[n - k]](livre48x.png)

Product. The product of two sequences x[n] and w[n] is their term-by-term product

![y[n] = x[n]w[n]](livre49x.png) | (2.15) |

Integration. The discrete-time equivalent of integration is expressed by the following running sum:

![∑n

y[n] = x[k]

k= -∞](livre50x.png) | (2.16) |

Intuitively, integration computes a non-normalized running average of the discrete-time signal.

Differentiation. A discrete-time approximation to differentiation is the first-order difference:(3)

![y[n] = x [n]- x[n - 1]](livre51x.png) | (2.17) |

With respect to Section 2.1.2, note how the unit step can be obtained by applying the integration operator to the discrete-time impulse; conversely, the impulse can be obtained by applying the differentiation operator to the unit step.

The signal reproducing formula is a simple application of the basic signal and signal properties that we have just seen and it states that

![∑∞

x[n ] = x[k]δ[n- k]

k=-∞](livre52x.png) | (2.18) |

Any signal can be expressed as a linear combination of suitably weighed and shifted impulses. In this case, the weights are nothing but the signal values themselves. While self-evident, this formula will reappear in a variety of fundamental derivations since it captures the “inner structure” of a discrete-time signal.

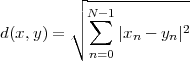

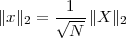

We define the energy of a discrete-time signal as

![∞∑

Ex = ∥x∥2= ||x [n]||2

2 n=-∞](livre53x.png) | (2.19) |

(where the squared-norm notation will be clearer after the next Chapter). This definition is consistent with the idea that, if the values of the sequence represent a time-varying voltage, the above sum would express the total energy (in joules) dissipated over a 1Ω-resistor. Obviously, the energy is finite only if the above sum converges, i.e. if the sequence x[n] is square-summable. A signal with this property is sometimes referred to as a finite- energy signal. For a simple example of the converse, note that a periodic signal which is not identically zero is not square-summable.

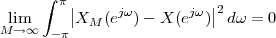

We define the power of a signal as the usual ratio of energy over time, taking the limit over the number of samples considered:

![N -1

-1-∑ || ||2

Px = Nlim→∞ 2N x [n]

-N](livre54x.png) | (2.20) |

Clearly, signals whose energy is finite, have zero total power (i.e. their energy dilutes to zero over an infinite time duration). Exponential sequences which are not decaying (i.e. those for which |a| > 1 in (2.7)) possess infinite power (which is consistent with the fact that they describe an unstable behavior). Note, however, that many signals whose energy is infinite do have finite power and, in particular, periodic signals (such as sinusoids and combinations thereof). Due to their periodic nature, however, the above limit is undetermined; we therefore define their power to be simply the average energy over a period. Assuming that the period is N samples, we have

![N∑ -1| |

Px = 1- |x [n]|2

N n=0](livre55x.png) | (2.21) |

The examples of discrete-time signals in (2.1) and (2.2) are two-sided, infinite sequences. Of course, in the practice of signal processing, it is impossible to deal with infinite quantities of data: for a processing algorithm to execute in a finite amount of time and to use a finite amount of storage, the input must be of finite length; even for algorithms that operate on the fly, i.e. algorithms that produce an output sample for each new input sample, an implicit limitation on the input data size is imposed by the necessarily limited life span of the processing device.(4) This limitation was all too apparent in our attempts to plot infinite sequences as shown in Figure 2.1 or 2.2: what the diagrams show, in fact, is just a meaningful and representative portion of the signals; as for the rest, the analytical description remains the only reference. When a discrete-time signal admits no closed-form representation, as is basically always the case with real-world signals, its finite time support arises naturally because of the finite time spent recording the signal: every piece of music has a beginning and an end, and so did every phone conversation. In the case of the sequence representing the Dow Jones index, for instance, we basically cheated since the index did not even exist for years before 1884, and its value tomorrow is certainly not known – so that the signal is not really a sequence, although it can be arbitrarily extended to one. More importantly (and more often), the finiteness of a discrete-time signal is explicitly imposed by design since we are interested in concentrating our processing efforts on a small portion of an otherwise longer signal; in a speech recognition system, for instance, the practice is to cut up a speech signal into small segments and try to identify the phonemes associated to each one of them.(5) A special case is that of periodic signals; even though these are bona-fide infinite sequences, it is clear that all information about them is contained in just one period. By describing one period (graphically or otherwise), we are, in fact, providing a full description of the sequence. The complete taxonomy of the discrete-time signals used in the book is the subject of the next Sections ans is summarized in Table 2.1.

As we just mentioned, a finite-length discrete-time signal of length N are just a collection of N complex values. To introduce a point that will reappear throughout the book, a finite-length signal of length N is entirely equivalent to a vector in CN. This equivalence is of immense import since all the tools of linear algebra become readily available for describing and manipulating finite-length signals. We can represent an N-point finite-length signal using the standard vector notation

![x = [x x ... x ]T

0 1 N -1](livre56x.png)

Note the transpose operator, which declares x as a column vector; this is the customary practice in the case of complex-valued vectors. Alternatively, we can (and often will) use a notation that mimics the one used for proper sequences:

![x[n ], n = 0, ..., N - 1](livre57x.png)

Here we must remember that, although we use the notation x[n], x[n] is not defined for values outside its support, i.e. for n < 0 or for n ≥ N. Note that we can always obtain a finite-length signal from an infinite sequence by simply dropping the sequence values outside the indices of interest. Vector and sequence notations are equivalent and will be used interchangeably according to convenience; in general, the vector notation is useful when we want to stress the algorithmic or geometric nature of certain signal processing operations. The sequence notation is useful in stressing the algebraic structure of signal processing.

Finite-length signals are extremely convenient entities: their energy is always and, as a consequence, no stability issues arise in processing. From the computational point of view, they are not only a necessity but often the cornerstone of very efficient algorithmic design (as we will see, for instance, in the case of the FFT); one could say that all “practical” signal processing lives in CN. It would be extremely awkward, however, to develop the whole theory of signal processing only in terms of finite-length signals; the asymptotic behavior of algorithms and transformations for infinite sequences is also extremely valuable since a stability result proven for a general sequence will hold for all finite-length signals too. Furthermore, the notational flexibility which infinite sequences derive from their function-like definition is extremely practical from the point of view of the notation. We can immediately recognize and understand the expression x[n - k] as a k-point shift of a sequence x[n]; but, in the case of finite-support signals, how are we to define such a shift? We would have to explicitly take into account the finiteness of the signal and the associated “border effects”, i.e. the behavior of operations at the edges of the signal. For this reason, in most derivations which involve finite-length signal, these signals will be embedded into proper sequences, as we will see shortly.

Aperiodic Signals. The most general type of discrete-time signal is represented by a generic infinite complex sequence. Although, as previously mentioned, they lie beyond our processing and storage capabilities, they are invaluably useful as a generalization in the limit. As such, they must be handled with some care when it comes to their properties. We will see shortly that two of the most important properties of infinite sequences concern their summability: this can take the form of either absolute summability (stronger condition) or square summability (weaker condition, corresponding to finite energy).

|

|

Periodic Signals. A periodic sequence with period N is one for which

![˜x[n] = ˜x[n + kN ], k ∈ Z](livre62x.png) | (2.22) |

The tilde notation  [n] will be used whenever we need to explicitly stress

a periodic behavior. Clearly an N-periodic sequence is completely

defined by its N values over a period; that is, a periodic sequence

“carries no more information” than a finite-length signal of length

N.

[n] will be used whenever we need to explicitly stress

a periodic behavior. Clearly an N-periodic sequence is completely

defined by its N values over a period; that is, a periodic sequence

“carries no more information” than a finite-length signal of length

N.

Periodic Extensions. Periodic sequences are infinite in length, and yet their information is contained within a finite number of samples. In this sense, periodic sequences represent a first bridge between finite-length signals and infinite sequences. In order to “embed” a finite-length signal x[n], n = 0,…,N - 1 into a sequence, we can take its periodized version:

![˜x[n] = x[n mod N ], n ∈ Z-](livre64x.png) | (2.23) |

this is called the periodic extension of the finite length signal x[n]. This

type of extension is the “natural” one in many contexts, for reasons

which will be apparent later when we study the frequency-domain

representation of discrete-time signals. Note that now an arbitrary

shift of the periodic sequence corresponds to the periodization of

a circular shift of the original finite-length signal. A circular shift

by k  Z is easily visualized by imagining a shift register; if we are

shifting towards the right (k > 0), the values which pop out of the

rightmost end of the shift register are pushed back in at the other

end.(6)

The relationship between the circular shift of a finite-length signal and

the linear shift of its periodic extension is depicted in Figure 2.6.

Finally, the energy of a periodic extension becomes infinite, while its

power is simply the energy of the finite-length original signal scaled by

1∕N.

Z is easily visualized by imagining a shift register; if we are

shifting towards the right (k > 0), the values which pop out of the

rightmost end of the shift register are pushed back in at the other

end.(6)

The relationship between the circular shift of a finite-length signal and

the linear shift of its periodic extension is depicted in Figure 2.6.

Finally, the energy of a periodic extension becomes infinite, while its

power is simply the energy of the finite-length original signal scaled by

1∕N.

Finite-Support Signals. An infinite discrete-time sequence  [n] is said to

have finite support if its values are zero for all indices outside of an interval;

that is, there exist N and M

[n] is said to

have finite support if its values are zero for all indices outside of an interval;

that is, there exist N and M  Z such that

Z such that

![¯x[n] = 0 for n < M and n > M + N - 1](livre66x.png)

Note that, although  [n] is an infinite sequence, the knowledge of M and of

the N nonzero values of the sequence completely specifies the entire signal.

This suggests another approach to embedding a finite-length signal x[n],

n = 0,…,N - 1, into a sequence, i.e.

[n] is an infinite sequence, the knowledge of M and of

the N nonzero values of the sequence completely specifies the entire signal.

This suggests another approach to embedding a finite-length signal x[n],

n = 0,…,N - 1, into a sequence, i.e.

![{

x[n] if 0 ≤ n < N - 1

¯x[n] = n ∈ Z-

0 otherwise](livre68x.png) | (2.24) |

where we have chosen M = 0 (but any other choice of M could be

used). Note that, here, in contrast to the the periodic extension of

x[n], we are actually adding arbitrary information in the form of

the zero values outside of the support interval. This is not without

consequences, as we will see in the following Chapters. In general, we will

use the bar notation  [n] for sequences defined as the finite support

extension of a finite-length signal. Note that, now, the shift of the

finite-support extension gives rise to a zero-padded shift of the signal

locations between M and M + N - 1; the dynamics of the shift are shown in

Figure 2.7.

[n] for sequences defined as the finite support

extension of a finite-length signal. Note that, now, the shift of the

finite-support extension gives rise to a zero-padded shift of the signal

locations between M and M + N - 1; the dynamics of the shift are shown in

Figure 2.7.

|

|

Example 2.1: Discrete-time in the Far West

The fact that the “fastest” digital frequency is 2π can be readily appreciated in old western movies. In classic scenarios there is always a sequence showing a stagecoach leaving town. We can see the spoked wagon wheels starting to turn forward faster and faster, then stop and then starting to turn backwards. In fact, each frame in the movie is a snapshot of a spinning disk with increasing angular velocity. The filming process therefore transforms the wheel’s movement into a sequence of discrete-time positions depicting a circular motion with increasing frequency. When the speed of the wheel is such that the time between frames covers a full revolution, the wheel appears to be stationary: this corresponds to the fact that the maximum digital frequency ω = 2π is undistinguishable from the slowest frequency ω = 0. As the speed of the real wheel increases further, the wheel on film starts to move backwards, which corresponds to a negative digital frequency. This is because a displacement of 2π + α between successive frames is interpreted by the brain as a negative displacement of α: our intuition always privileges the most economical explanation of natural phenomena.

Example 2.2: Building periodic signals

Given a discrete-time signal x[n] and an integer N > 0 we can always formally write

![∞∑

y˜[n] = x[n - kN ]

k=-∞](livre75x.png)

The signal ỹ[n], if it exists, is an N-periodic sequence. The periodic signal ỹ[n] is “manufactured” by superimposing infinite copies of the original signal x[n] spaced N samples apart. We can distinguish three cases:

|

|

The first two cases are illustrated in Figure 2.8. In practice, the periodization of short sequences is an effective method to synthesize the sound of string instruments such as a guitar or a piano; used in conjunction with simple filters, the technique is known as the Karplus-Strong algorithm.

As an example of the last type, take for instance the signal x[n] = α-n u[n]. The periodization formula leads to

| ỹ[n] | = ∑ k=-∞∞α-(n-kN)u[n - kN] = ∑ k=-∞⌊n∕N⌋α-(n-kN) |

| ỹ[n] | = ∑ k=-∞mα-(m-k)N-i = α-i ∑ h=0∞α-hN |

![-(n mod N)

˜y[n ] = α----------

1 - α-N](livre80x.png) | (2.25) |

which is indeed N-periodic. An example is shown in Figure 2.9.

|

|

For more discussion on discrete-time signals, see Discrete-Time Signal Processing, by A. V. Oppenheim and R. W. Schafer (Prentice-Hall, last edition in 1999), in particular Chapter 2.

Other books of interest include: B. Porat, A Course in Digital Signal Processing (Wiley, 1997) and R. L. Allen and D. W. Mills’ Signal Analysis (IEEE Press, 2004).

Exercise 2.1: Review of complex numbers.

+ j

+ j . Compute ∑

n=1∞s[n].

. Compute ∑

n=1∞s[n].

n.

n.

Exercise 2.2: Periodic signals. For each of the following discrete-time signals, state whether the signal is periodic and, if so, specify the period:

| © Presses polytechniques et universitaires romandes, 2008 All rights reserved |

In the 17th century, algebra and geometry started to interact in a fruitful synergy which continues to the present day. Descartes’s original idea of translating geometric constructs into algebraic form spurred a new line of attack in mathematics; soon, a series of astonishing results was produced for a number of problems which had long defied geometrical solutions (such as, famously, the trisection of the angle). It also spearheaded the notion of vector space, in which a geometrical point could be represented as an n-tuple of coordinates; this, in turn, readily evolved into the theory of linear algebra. Later, the concept proved useful in the opposite direction: many algebraic problems could benefit from our innate geometrical intuition once they were cast in vector form; from the easy three-dimensional visualization of concepts such as distance and orthogonality, more complex algebraic constructs could be brought within the realm of intuition. The final leap of imagination came with the realization that the concept of vector space could be applied to much more abstract entities such as infinite-dimensional objects and functions. In so doing, however, spatial intuition could be of limited help and for this reason, the notion of vector space had to be formalized in much more rigorous terms; we will see that the definition of Hilbert space is one such formalization.

Most of the signal processing theory which in this book can be usefully cast in terms of vector notation and the advantages of this approach are exactly what we have just delineated. Firstly of all, all the standard machinery of linear algebra becomes immediately available and applicable; this greatly simplifies the formalism used in the mathematical proofs which will follow and, at the same time, it fosters a good intuition with respect to the underlying principles which are being put in place. Furthermore, the vector notation creates a frame of thought which seamlessly links the more abstract results involving infinite sequences to the algorithmic reality involving finite-length signals. Finally, on the practical side, vector notation is the standard paradigm for numerical analysis packages such as Matlab; signal processing algorithms expressed in vector notation translate to working code with very little effort.

In the previous Chapter, we established the basic notation for the different classes of discrete-time signals which we will encounter time and again in the rest of the book and we hinted at the fact that a tight correspondence can be established between the concept of signal and that of vector space. In this Chapter, we pursue this link further, firstly by reviewing the familiar Euclidean space in finite dimensions and then by extending the concept of vector space to infinite-dimensional Hilbert spaces.

Euclidean geometry is a straightforward formalization of our spatial sensory experience; hence its cornerstone role in developing a basic intuition for vector spaces. Everybody is (or should be) familiar with Euclidean geometry and the natural “physical” spaces like R2 (the plane) and R3 (the three-dimensional space). The notion of distance is clear; orthogonality is intuitive and maps to the idea of a “right angle”. Even a more abstract concept such as that of basis is quite easy to contemplate (the standard coordinate concepts of latitude, longitude and height, which correspond to the three orthogonal axes in R3). Unfortunately, immediate spatial intuition fails us for higher dimensions (i.e. for RN with N > 3), yet the basic concepts introduced for R3 generalize easily to RN so that it is easier to state such concepts for the higher-dimensional case and specialize them with examples for N = 2 or N = 3. These notions, ultimately, will be generalized even further to more abstract types of vector spaces. For the moment, let us review the properties of RN, the N-dimensional Euclidean space.

Vectors and Notation. A point in RN is specified by an N-tuple of coordinates:(1)

![⌊ ⌋

| x0 |

|| x1 ||

x = | . | = [x0 x1 ... xN -1]T

|⌈ .. |⌉

xN -1](livre88x.png)

where xi  R, i = 0,1,…,N - 1. We call this set of coordinates a

vector and the N-tuple will be denoted synthetically by the symbol x;

coordinates are usually expressed with respect to a “standard” orthonormal

basis.(2)

The vector

R, i = 0,1,…,N - 1. We call this set of coordinates a

vector and the N-tuple will be denoted synthetically by the symbol x;

coordinates are usually expressed with respect to a “standard” orthonormal

basis.(2)

The vector

![0 = [ ]T

0 0 ... 0](livre89x.png)

i.e. the null vector, is considered the origin of the coordinate system.

The generic n-th element in vector x is indicated by the subscript xn. In

the following we will often consider a set of M arbitrarily chosen vectors in

RN and this set will be indicated by the notation  x(k)

x(k) k=0 …M-1.

Each vector in the set is indexed by the superscript ⋅(k). The n-th

element of the k-th vector in the set is indicated by the notation

xn(k)

k=0 …M-1.

Each vector in the set is indexed by the superscript ⋅(k). The n-th

element of the k-th vector in the set is indicated by the notation

xn(k)

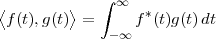

Inner Product. The inner product between two vectors x,y  RN is

defined as

RN is

defined as

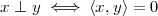

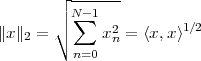

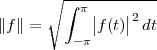

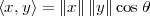

| (3.1) |

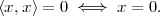

We say that x and y are orthogonal when their inner product is zero:

| (3.2) |

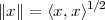

Norm. The norm of a vector is defined in terms of the inner product as

| (3.3) |

It is easy to visualize geometrically that the norm of a vector corresponds to

its length, i.e. to the distance between the origin and the point identified by

the vector’s coordinates. A remarkable property linking the inner product

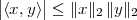

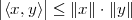

and the norm is the Cauchy-Schwarz inequality (the proof of which is

nontrivial); given x,y  RN we can state that

RN we can state that

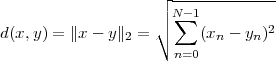

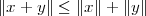

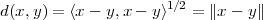

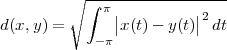

Distance. The concept of norm is used to introduce the notion of Euclidean distance between two vectors x and y:

| (3.4) |

|

|

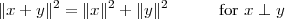

From this, we can easily derive the Pythagorean theorem for N dimensions: if two vectors are orthogonal, x ⊥ y, and we consider the sum vector z = x + y, we have

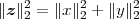

| (3.5) |

The above properties are graphically shown in Figure 3.1 for R2.

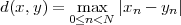

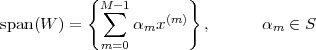

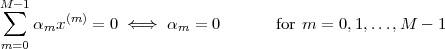

Bases. Consider a set of M arbitrarily chosen vectors in RN: {x(k)}k=0…M-1.

Given such a set, a key question is that of completeness: can any

vector in RN be written as a linear combination of vectors from the

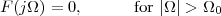

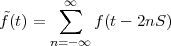

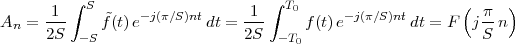

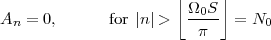

set? In other words, we ask ourselves whether, for any z  RN, we